- Nginx Installed: If Nginx isn’t already installed, you can install it using your package manager. For example, on Ubuntu:

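sudo apt update
sudo apt install nginx

- Domains Pointing to Your Server: Ensure the domain for each service (for example, airbyte.logu.au) has a DNS record pointing to your server’s IP address. Let’s Encrypt checks this when it issues certificates.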

- Certbot Installed: Install Certbot on your server:

sudo apt-get update
sudo apt-get install certbot python3-certbot-nginx
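To confirm the tools are in place before continuing, a small shell sketch like the one below can report which required commands are missing from `PATH`. The function name `missing_commands` is hypothetical, and it only checks that the executables can be found, not that they are configured.

```shell
# Hypothetical helper: print each named command that is not on PATH.
# Returns 0 if every command was found, 1 otherwise.
missing_commands() {
    local cmd status=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            status=1
        fi
    done
    return "$status"
}

# Example: check the two tools this guide relies on.
# missing_commands nginx certbot || echo "Install the commands listed above first."
```

If the example line prints nothing, both `nginx` and `certbot` were found.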

Configuring Nginx Reverse Proxy for Each Service:

For each service, create a separate Nginx configuration file in /etc/nginx/sites-available/:

sudo nano /etc/nginx/sites-available/SERVICE_NAME

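Replace SERVICE_NAME with the service’s domain (for example, airbyte.logu.au), so the file name matches the domain you will later pass to Certbot, and replace BACKEND_ADDRESS with the host and port your backend listens on (such as 127.0.0.1:8000).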

Example for Airbyte (airbyte.logu.au):

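server {
    listen 80;
    server_name airbyte.logu.au;

    location / {
        # Forward all requests to the backend service
        proxy_pass http://BACKEND_ADDRESS;
        # Pass the original host, client IP, and scheme on to the backend
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }

    # Serve Let's Encrypt HTTP-01 challenge files from /var/www/html
    # (needed for webroot validation; certbot --nginx answers challenges itself)
    location ~ /.well-known/acme-challenge {
        allow all;
        root /var/www/html;
    }
}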

Repeat this step for each service, customizing the server_name and proxy_pass accordingly.
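Because only `server_name` and `proxy_pass` change between services, the per-service files can also be generated. The sketch below assumes a hypothetical `render_site` function that prints the same server block as the Airbyte example for a given domain and backend address, plus a hypothetical `write_sites` helper that reads `DOMAIN BACKEND` pairs from standard input and writes one file per domain, named after the domain.

```shell
# Hypothetical helper: print the reverse-proxy server block for one service.
# $1 = domain (server_name), $2 = backend address for proxy_pass, e.g. 127.0.0.1:8000
render_site() {
    local domain="$1" backend="$2"
    # Unquoted EOF so ${domain} and ${backend} expand; nginx variables are escaped as \$.
    cat <<EOF
server {
    listen 80;
    server_name ${domain};

    location / {
        proxy_pass http://${backend};
        proxy_set_header Host \$host;
        proxy_set_header X-Real-IP \$remote_addr;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
    }

    location ~ /.well-known/acme-challenge {
        allow all;
        root /var/www/html;
    }
}
EOF
}

# Hypothetical helper: read "DOMAIN BACKEND" lines from stdin and write
# one config file per domain into directory $1, named after the domain.
write_sites() {
    local dir="$1" domain backend
    while read -r domain backend || [ -n "$domain" ]; do
        # Skip blank lines and lines starting with '#'.
        case "$domain" in ''|'#'*) continue ;; esac
        render_site "$domain" "$backend" > "$dir/$domain"
    done
}
```

For a single service, something like `render_site airbyte.logu.au 127.0.0.1:8000 | sudo tee /etc/nginx/sites-available/airbyte.logu.au > /dev/null` would write the file (the backend address here is a placeholder). Writing to a scratch directory first with `write_sites` lets you review the files before copying them into place.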

Enabling Nginx Configuration:

Create symbolic links to enable the Nginx configurations:

sudo ln -s /etc/nginx/sites-available/SERVICE_NAME /etc/nginx/sites-enabled/
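The symlink step can be wrapped in a small function that refuses to link a file that does not exist and can be re-run safely. This is a sketch: `enable_site` is a hypothetical name, and the default directories assume the Debian/Ubuntu Nginx layout used in this guide.

```shell
# Hypothetical helper: enable a site by symlinking it into sites-enabled.
# $1 = file name; $2 and $3 default to the Debian/Ubuntu nginx directories.
enable_site() {
    local name="$1"
    local available="${2:-/etc/nginx/sites-available}"
    local enabled="${3:-/etc/nginx/sites-enabled}"

    # Refuse to link a config that does not exist.
    if [ ! -f "$available/$name" ]; then
        echo "no such site: $available/$name" >&2
        return 1
    fi
    # -f replaces an existing link, so running this twice is harmless.
    ln -sf "$available/$name" "$enabled/$name"
}
```

Because `sudo` cannot call shell functions directly, source this from a root shell and run, for example, `enable_site airbyte.logu.au`.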

Testing and Restarting Nginx:

Ensure there are no syntax errors:

sudo nginx -t

If no errors are reported, restart Nginx:

sudo service nginx restart
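Testing and restarting can be combined so that Nginx is only touched when the configuration is valid. This sketch uses `systemctl reload nginx`, which applies the new configuration without fully stopping the server; `sudo service nginx restart`, as above, also works. The function name is hypothetical, and it assumes a systemd-based system such as current Ubuntu.

```shell
# Hypothetical helper: reload nginx only if the configuration test passes.
reload_nginx_if_valid() {
    if sudo nginx -t; then
        sudo systemctl reload nginx
    else
        echo "nginx -t failed; not reloading" >&2
        return 1
    fi
}
```

If `nginx -t` reports an error, the running server keeps its previous configuration.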

Obtaining Let’s Encrypt SSL Certificates:

Run Certbot for each service:

sudo certbot --nginx -d SERVICE_NAME

Follow the prompts to configure SSL and automatically update Nginx configurations.
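With several services, the Certbot command can be run in a loop over their domains. This is a hedged sketch: `issue_certs` is a hypothetical name, and it assumes each domain already has a matching `server_name` in an enabled Nginx configuration. It stops at the first failure so a DNS or configuration problem is noticed before the remaining domains are attempted.

```shell
# Hypothetical helper: request a certificate for each domain in turn.
issue_certs() {
    local domain
    for domain in "$@"; do
        echo "Requesting certificate for $domain"
        # Stop at the first failure instead of continuing with later domains.
        sudo certbot --nginx -d "$domain" || return 1
    done
}

# Example: issue_certs airbyte.logu.au
```

Certbot will still prompt interactively for each domain. To skip the prompts, Certbot also accepts `--non-interactive`, `--agree-tos`, and `-m EMAIL`; check `certbot --help all` for the options your version supports.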

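Testing Renewal and Checking DNS Records:

Test the renewal process. A single command covers every certificate on the server, and the dry run uses Let’s Encrypt’s staging environment, so your real certificates are not replaced:

sudo certbot renew --dry-run

Keep the DNS records for each service pointing to your server’s IP address. Certbot validates each domain over HTTP, so both the first certificate request and every renewal fail if a record points elsewhere.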
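To check the DNS records before a first run or a renewal, a sketch like the following compares each domain’s IPv4 addresses with the server’s address. Both function names are hypothetical, it assumes glibc’s `getent` (which supports the `ahostsv4` database), and the comparison does not apply if a domain sits behind a proxy or CDN.

```shell
# Hypothetical helper: succeed if DOMAIN has an IPv4 address equal to IP.
# Assumes glibc's getent, which supports the "ahostsv4" database.
domain_points_to() {
    local domain="$1" ip="$2"
    getent ahostsv4 "$domain" | awk '{print $1}' | grep -qxF "$ip"
}

# Hypothetical helper: check every domain against this server's address.
# $1 = server IP, remaining arguments = domains.
check_dns() {
    local ip="$1" domain status=0
    shift
    for domain in "$@"; do
        if domain_points_to "$domain" "$ip"; then
            echo "ok:       $domain -> $ip"
        else
            echo "MISMATCH: $domain does not resolve to $ip" >&2
            status=1
        fi
    done
    return "$status"
}

# Example (203.0.113.10 is a placeholder for your server's public IP):
# check_dns 203.0.113.10 airbyte.logu.au
```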

Verifying HTTPS Access:

Open each service over HTTPS (e.g., https://airbyte.logu.au) and check that the browser shows the padlock icon without any certificate warnings.
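The same check can be done from the command line. In this sketch (the function name is hypothetical), `curl` verifies the certificate by default, so an invalid, expired, or mismatched certificate makes the request fail instead of printing a status code.

```shell
# Hypothetical helper: fetch https://DOMAIN/ and print the HTTP status code.
# curl verifies the certificate by default; a bad certificate gives a non-zero exit.
check_https() {
    local domain="$1" code
    if code="$(curl -sS -o /dev/null -w '%{http_code}' "https://$domain/")"; then
        echo "$domain: HTTPS OK (HTTP $code)"
    else
        echo "$domain: HTTPS request failed" >&2
        return 1
    fi
}

# Example: check_https airbyte.logu.au
```

Once the certificate is in place, this should print a 2xx or 3xx status. A failure usually points to a certificate or DNS problem, although plain network errors fail the same way.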

Repeat these steps for each service, replacing SERVICE_NAME with the respective service’s domain.

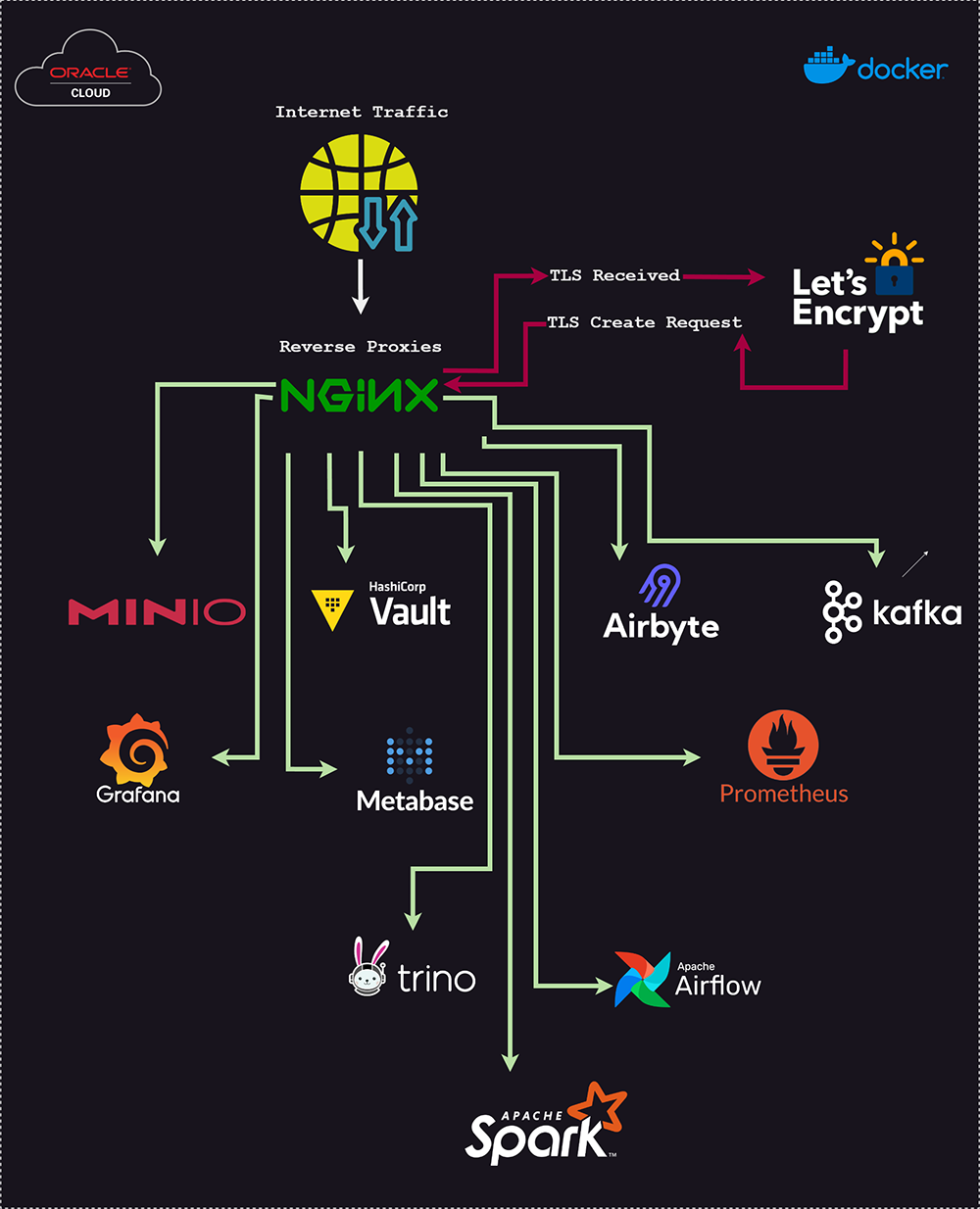

By following these steps, you’ll have a secure setup with Nginx acting as a reverse proxy and Let’s Encrypt providing SSL certificates for each service. Adjust configurations as needed based on specific service requirements. Stay secure and enjoy your enhanced web services!